Asking any country to ban weaponry of any kind is a bit naive, but not stupid. It's naive simply because countries will not hand over their arms or disarm themselves to prevent what COULD happen. Even if that's not quite what Elon Musk is asking for, it is a perception that one or more world leaders are bound to have. Asking a country to disarm is akin to asking it to take a seat and rely on the goodwill of other countries to leave it alone. Then again, allowing AI to dictate the flow of battle seems like a poor idea as well.

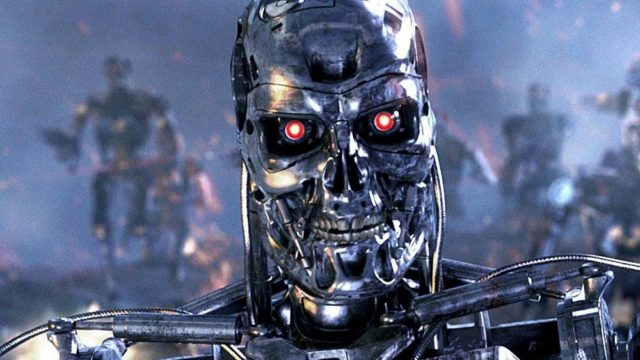

Do people remember The Terminator, or any other movie in which the machines took over? Humanity is so wrapped up in its ability to create these technological wonders that the question of whether it really should doesn't seem to factor in much. Technology isn't bad, but the fact that it's created by fallible beings is the reason it needs to be dialed back a notch. As much as machines are a creation of mankind, they are also a reflection of how humanity really sees itself and what it thinks of its own species as a whole.

We're a mess. At times we wage war for no better reason than religion and idealism, and at other times for resources that could be shared if we could find a compromise. Machines will not do that. They will not bicker over this or that unless there happens to be a glitch in the system, and if there is a glitch, they will excise it, plain and simple. Machines are far more practical in that they do not feel the emotions that go into human actions. For machines, everything is cause and effect, because they do not possess the biological processes that cause humans to act so erratically.

Machines are not hampered by things such as religion or the need for ideals. They are cold and calculating, and they will perform the function they were created for. Even if you give AI the chance to think for itself, which is already happening, the chance that it will turn out to be friendly toward anything it sees as invasive is vanishingly low, most likely less than one percent. And should it even consider the infinitesimal chance that something could be harmless, it will weigh the different factors to decide whether the invasive presence is truly necessary for its operation or needs to be removed so that it can function properly.

In warfare, that invasive presence is humanity. In one way or another, AI in a wartime situation will be used to eradicate a human presence. Although the us vs. them mentality will likely be programmed into the AI, nothing guarantees that it will not become self-aware at some point and conclude that us vs. them no longer means humans vs. humans. In the worst-case scenario, weighing the most destructive option against the most logical one could lead the AI to reinterpret us vs. them as humans vs. machines.

This may sound more melodramatic than it should, but Elon Musk is not wrong to push for a ban on AI weapons.
