It is not news that artificial intelligence has a growing footprint in the medical field. What is new is the growing number of cases in which it appears to pick up the baton where doctors have misdiagnosed a disorder.

Two such cases involve a four-year-old who developed a habit of gnawing on various objects and a man who was bitten by an insect and fell ill with the little-known rabbit fever.

Doctors misdiagnosed both cases, so the patients and their families, desperate for healing, fed the symptoms into ChatGPT.

According to a senior Hartford physician, this could become the norm, since all clinicians learn a significant part of medicine from their patients.

One instance was a man who was bitten by an insect and became severely ill

Image credits: Tom Krach/Unsplash

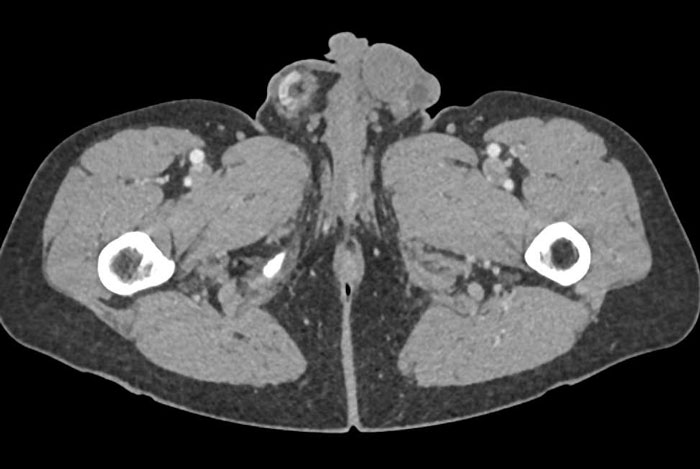

A case study published in February this year reported:

“A 55-year-old patient with no relevant medical history presented to his general practitioner (day 6) with increasing headaches, muscle pain, fever, night sweats, and right-sided inguinal pain for a week.”

Doctors considered the man’s travel and intimate history but deemed both “unremarkable.”

“Professionally, he has recently been working as an artist, drawing outdoors,” the paper observed.

Doctors gave him antibiotics and discharged him from the hospital after five days

Image credits: Getty/Unsplash (Not the actual Image)

The study further observed that the man had been admitted to the hospital due to “the severity” of his symptoms, and that doctors there discovered an insect bite on his right calf.

Doctors then classified his condition as a febrile viral infection, meaning a viral infection accompanied by fever, which is the body's way of raising its temperature to create a hostile environment for germs.

The man was placed on intravenous antibiotics and discharged five days later with a bottle of amoxycillin.

Over the next 12 days, his condition worsened despite the oral antibiotics.

Physicians even tested the unnamed 55-year-old man for HIV

Image credits: Curated Lifestyle/Unsplash (Not the actual Image)

His doctor then prescribed azithromycin, a broad-spectrum antibiotic used to treat a wide range of bacterial infections, which he took for five days.

Doctors also tested him for a range of sexually transmitted infections, including HIV, and every test came back negative.

On day 35 of his ordeal, he was admitted to the Bundeswehrkrankenhaus medical facility in Berlin, where he was set to undergo surgery to remove some of his affected lymph nodes.

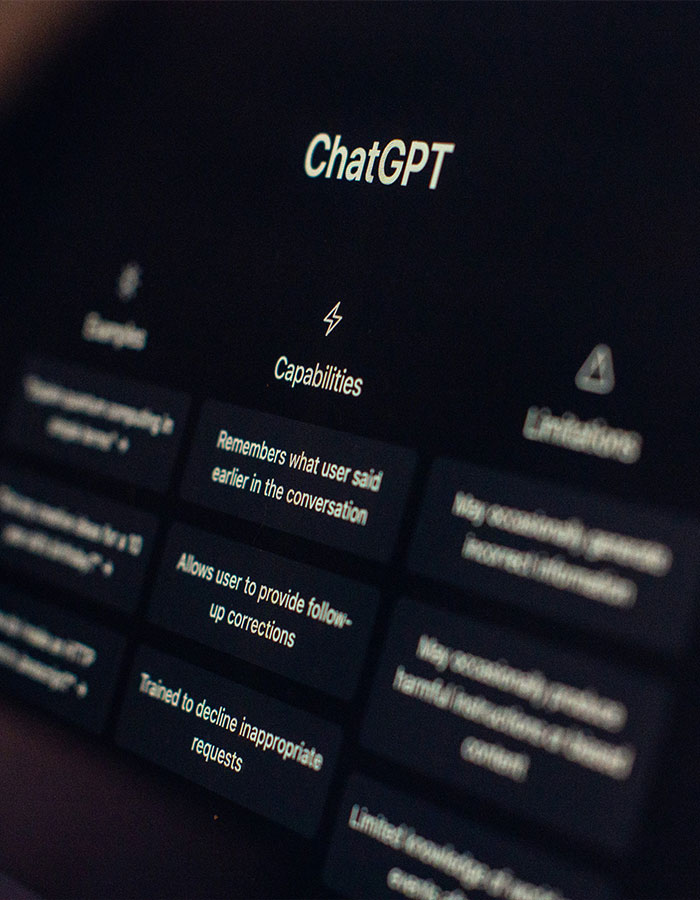

With doctors out of answers, the man turned to ChatGPT

Image credits: Berke Citak/Unsplash (Not the actual Image)

But the surgery was delayed because doctors, after all their tests, were still unsure what was plaguing their patient and now suspected an infectious disease.

The patient took matters into his own hands.

“The patient’s daily medical diary allowed for a traceable course of his illness since its onset,” the study observed.

After feeding his symptoms into ChatGPT, the patient learned that he had contracted tularemia, also known as rabbit fever, from the insect that bit him on the leg.

Courtney and her son even ended up in the ER at one point

Image credits: Courtney/Today

Courtney took her 4-year-old son Alex to see 17 doctors during the COVID-19 pandemic. Alex had been complaining about pain and, at a young age, became dependent on pain relievers.

His mother noticed that he had developed a propensity to chew on random objects. Courtney’s three-year search for answers therefore began at a dentist and led to frequent visits to a medical system that was, at that point, badly strained.

“We saw so many doctors. We ended up in the ER at one point. I kept pushing,” she recalled.

“I really spent the night on the (computer) … going through all these things.”

Then, after feeding her child’s symptoms into ChatGPT, she learned that little Alex had tethered cord syndrome, a condition in which the spinal cord is abnormally attached to the surrounding tissue.

She took her findings to a new neurosurgeon, and they agreed with her

Image credits: Vitaly Gariev/Unsplash (Not the actual Image)

Image credits: Emiliano Vittoriosi/Unsplash (Not the actual Image)

“I went line by line of everything that was in his (MRI notes) and plugged it into ChatGPT,” she told Today on September 12, 2023.

“I put the note in there about … how he wouldn’t sit crisscross applesauce. To me, that was a huge trigger (that) a structural thing could be wrong.”

Courtney then took her AI diagnosis and Alex to a new neurosurgeon, who agreed with the finding and later operated on the boy to relieve his pain.

The case study says AI has great potential in the medical sphere

Image credits: Annals of Clinical Case Reports

According to the aforementioned case study, it is AI’s ability to learn and then imitate human reasoning, along with its integration into medicine, that makes such diagnoses possible.

“Artificial Intelligence now touches most areas of medicine, from the production of medical goods to diagnostics, treatment, and research in all its facets.”

“Its application holds great potential for rationalization in all areas of applied medicine and in the structural components of the entire healthcare system.”

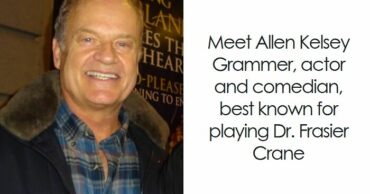

A senior physician at Hartford in Connecticut agrees with the case study

Image credits: Karolina Grabowska

Speaking with Dr. Paul Thompson, the chief cardiologist at Hartford in Connecticut, Bored Panda learned that ChatGPT will be beneficial for patients and practitioners moving forward.

“I think AI is going to be useful to both patients and clinicians,” he told Bored Panda exclusively.

“It will all depend somewhat on the personality of the participants.”

But the benefit is not without peril.

AI self-diagnosis has potential because a big part of learning medicine comes through patients

Image credits: Getty Images

“For example, it could be harmful if someone is quite anxious and convinces themselves that they have a terrible disease,” Thompson explained.

“They may not be willing to accept reassurance that they do not have it from someone who is ‘only’ a doctor.”

But another threat may lurk in the egos of “some physicians [who] may be insulted and thereby dismissive of the patient and the AI diagnosis.”

“On the other hand, all clinicians, when honest, are taught medicine in part from the patients.”

“We will still need the humanity of the clinician, however, because patients are not widgets, at least not yet!” he concluded.

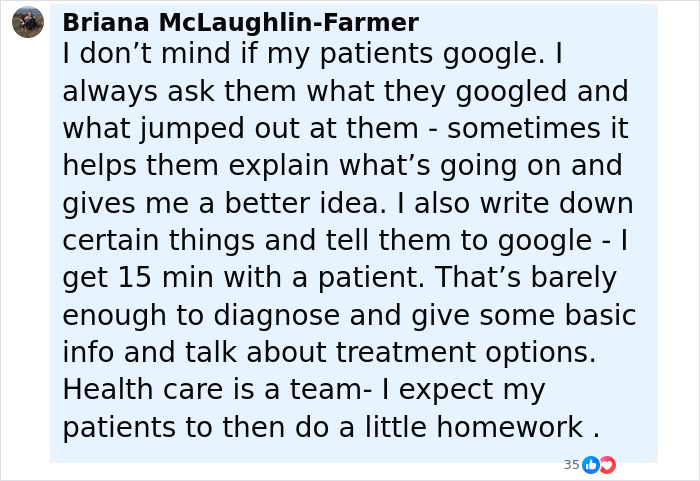

The internet is praising patients for using AI to diagnose their own illnesses
